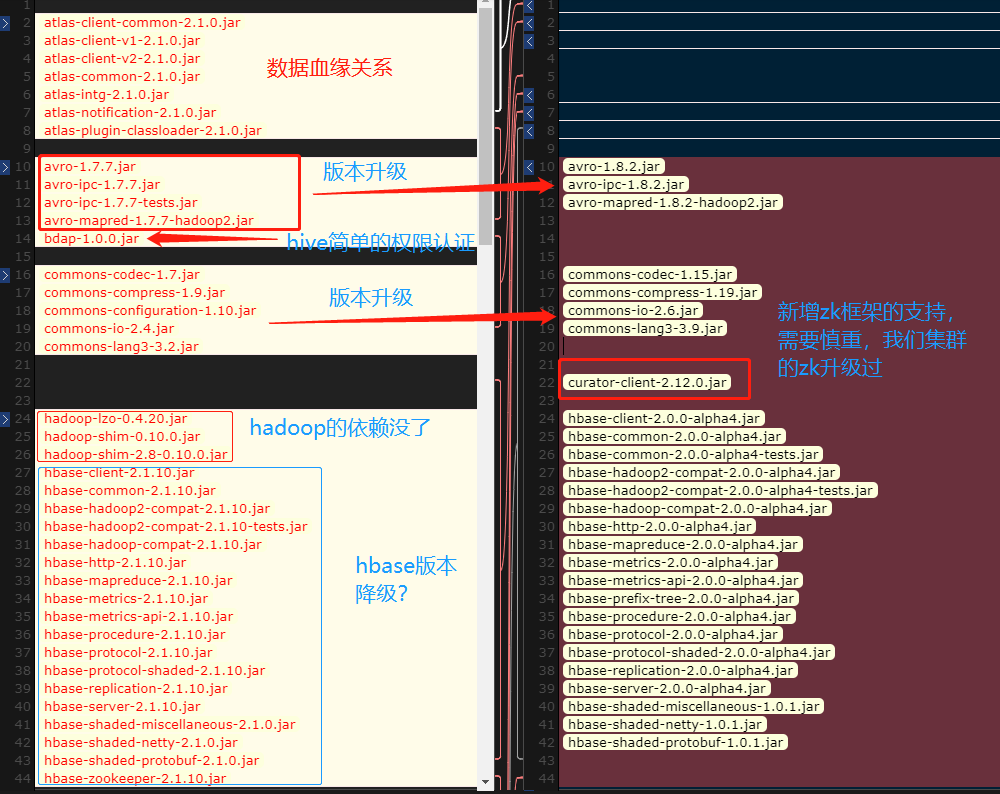

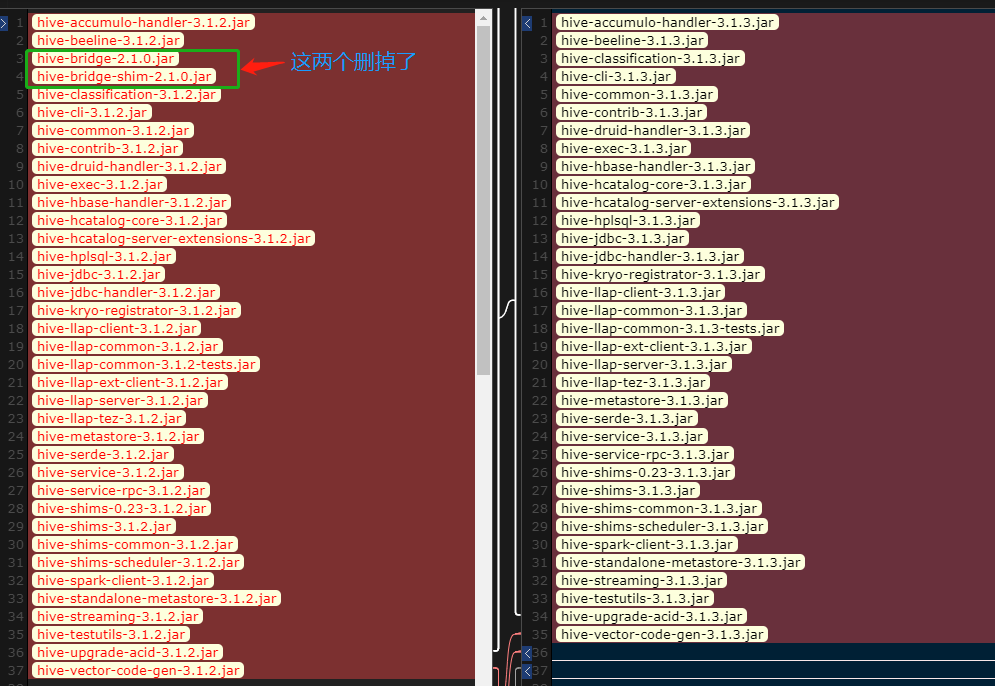

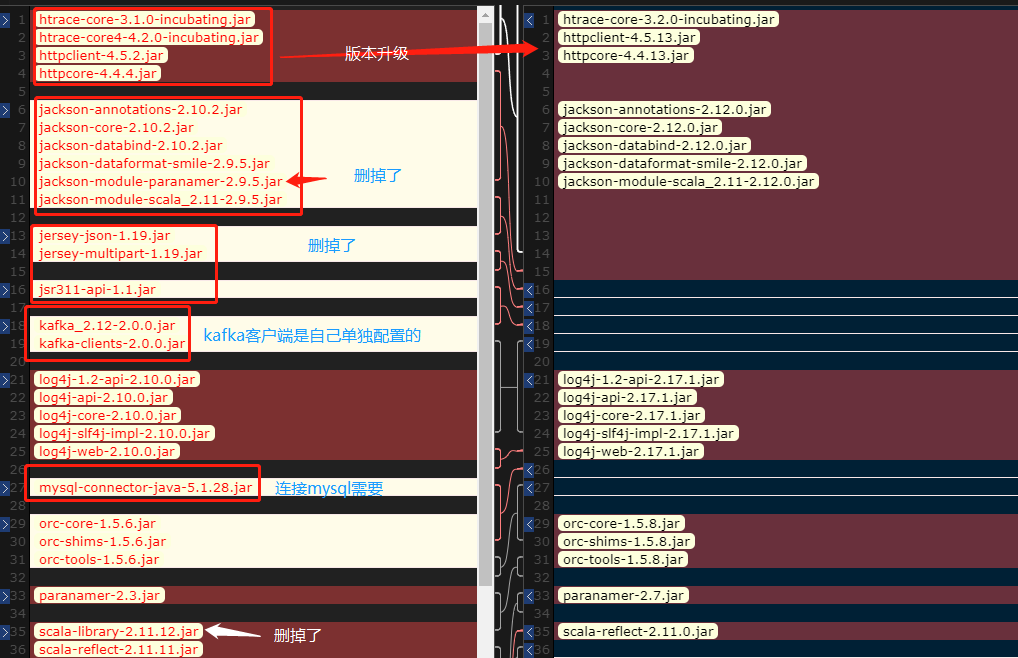

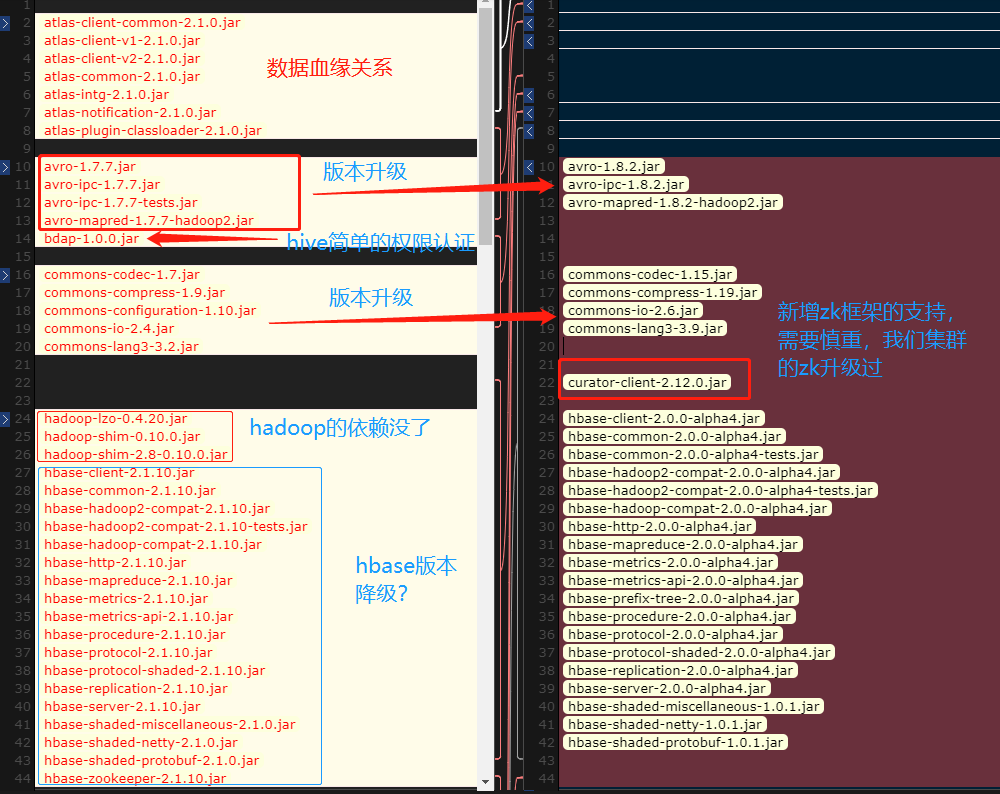

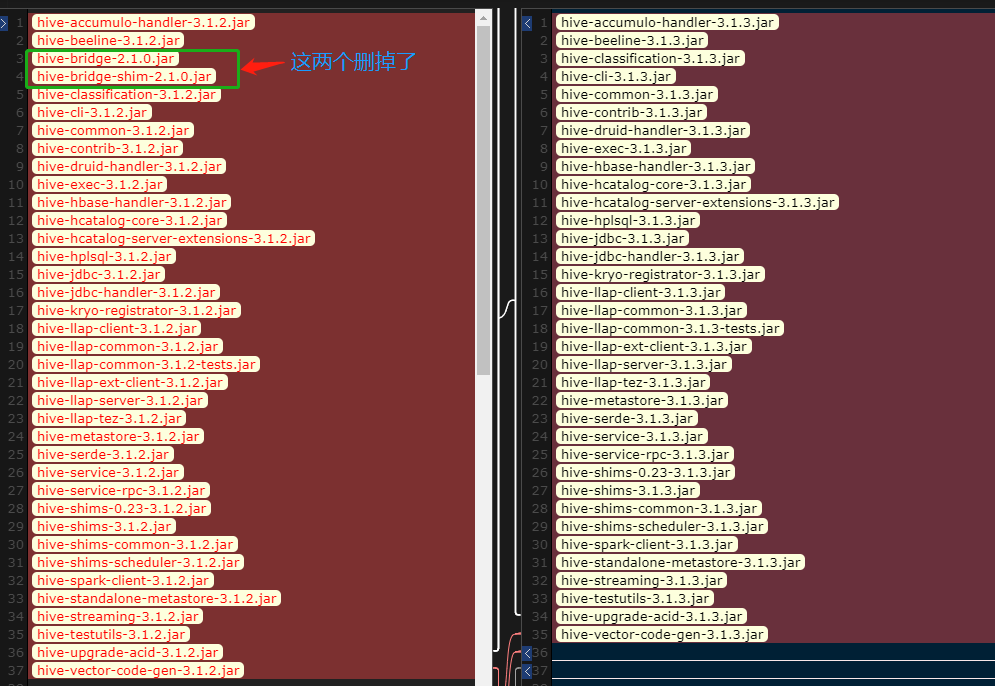

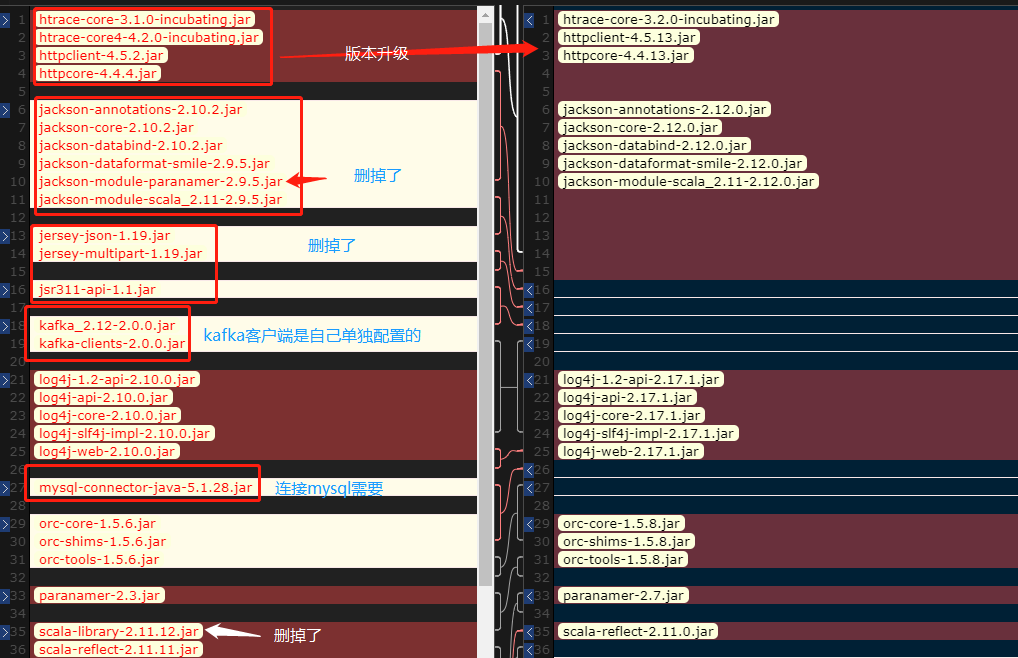

I. First, compare the jar differences between the two versions

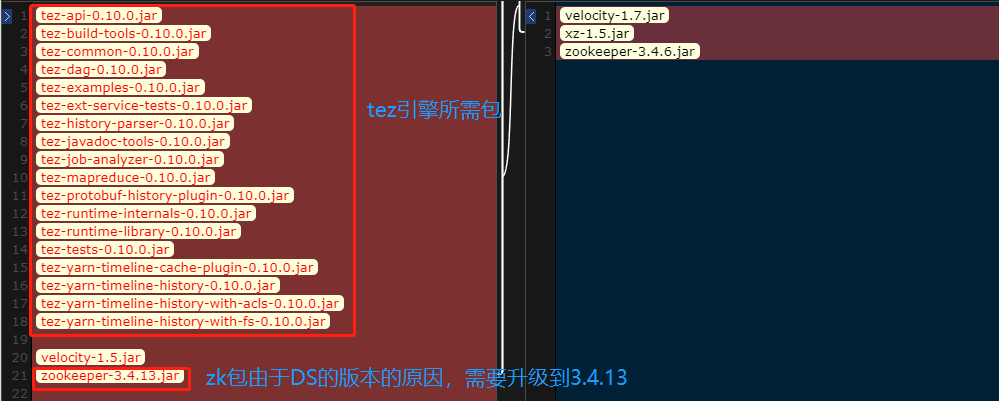

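Because of version compatibility issues in the code, record the differences between the original jars and the upgraded ones. One example is Jackson serialization, where mismatched versions cause encoding errors. In addition, the DolphinScheduler upgrade requires ZooKeeper to be upgraded to 3.4.13. These compatibility issues have already been debugged extensively. This upgrade is only meant to fix the Hive dynamic partition problem, and whether it actually does remains to be seen.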
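
One way to record the jar differences is to compare the file listings of the two lib directories. The sketch below is only an illustration: the helper name `jar_diff` and the directory arguments are assumptions, not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: list jar file names that differ between two lib directories.
# Lines starting with "<" exist only in the old directory, ">" only in the new one.
jar_diff() {
    old_dir=$1
    new_dir=$2
    old_list=$(mktemp)
    new_list=$(mktemp)
    # Compare by file name only, so a version bump shows up as one "<" and one ">" line.
    ls "$old_dir" | grep '\.jar$' | sort > "$old_list"
    ls "$new_dir" | grep '\.jar$' | sort > "$new_list"
    # grep exits non-zero when nothing differs; "|| true" keeps that from looking like an error.
    diff "$old_list" "$new_list" | grep '^[<>]' || true
    rm -f "$old_list" "$new_list"
}
```

Calling it as `jar_diff <old hive lib dir> <new hive lib dir>` would, for the ZooKeeper upgrade mentioned above, print one `<` line for the 3.4.6 jar and one `>` line for the 3.4.13 jar.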

II. Modify the configuration files

In the hive/conf directory, run the following commands to rename the template files to their working names:

    mv beeline-log4j2.properties.template beeline-log4j2.properties
    mv hive-exec-log4j2.properties.template hive-exec-log4j2.properties
    mv hive-default.xml.template hive-default.xml
    mv hive-log4j2.properties.template hive-log4j2.properties
    mv hive-env.sh.template hive-env.sh
    mv llap-cli-log4j2.properties.template llap-cli-log4j2.properties
    mv llap-daemon-log4j2.properties.template llap-daemon-log4j2.properties
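
The renames above could also be done in a loop. The sketch below is a variation, not the original procedure: it copies instead of moving, so the templates stay available for reference, and it assumes every `*.template` file in the directory should be activated. The function name `activate_templates` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: copy every *.template file in a conf directory to its working name.
# cp is used instead of mv so the original templates are kept.
activate_templates() {
    conf_dir=$1
    for tpl in "$conf_dir"/*.template; do
        # Skip the unexpanded glob when no template files exist.
        [ -e "$tpl" ] || continue
        target=${tpl%.template}
        # Do not overwrite a file that has already been customised.
        [ -e "$target" ] || cp "$tpl" "$target"
    done
}
```

Running `activate_templates .` from inside hive/conf should leave the same working files as the `mv` commands, plus the untouched templates.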

2.1 Edit the hive-log4j2.properties logging file:

    property.hive.log.dir = /var/log/udp/2.0.0.0/hive
    appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss} %p %c{2}: %m%n
    appender.DRFA.layout.pattern = %d{yyyy-MM-dd HH:mm:ss} %p %c{2}: %m%n

2.2 Edit the hive-exec-log4j2.properties logging file:

    property.hive.log.dir = /var/log/udp/2.0.0.0/hive/
    appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss} %p %c{2}: %m%n
    appender.DRFA.layout.pattern = %d{yyyy-MM-dd HH:mm:ss} %p %c{2}: %m%n

2.3 Edit the hive-site.xml configuration file

First create hive-site.xml by copying hive-default.xml. Note that the execution engine is set to MR for now.

    <configuration>
      <property>
        <name>hive.server2.logging.operation.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.server2.logging.operation.log.location</name>
        <value>/var/log/udp/2.0.0.0/hive/operation_logs</value>
      </property>
      <property>
        <name>hive.server2.logging.operation.level</name>
        <value>VERBOSE</value>
      </property>
      <property>
        <name>hive.server2.thrift.port</name>
        <value>10000</value>
      </property>
      <property>
        <name>hive.server2.thrift.bind.host</name>
        <value>hadoop004</value>
      </property>
      <property>
        <name>hive.server2.long.polling.timeout</name>
        <value>5000</value>
      </property>
      <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/ahpthd/user/hive/warehouse/</value>
      </property>
      <property>
        <name>hive.metastore.uris</name>
        <value>thrift://hadoop002:9083,thrift://hadoop003:9083</value>
      </property>
      <property>
        <name>hive.cluster.delegation.token.store.class</name>
        <value>org.apache.hadoop.hive.thrift.MemoryTokenStore</value>
      </property>
      <property>
        <name>hive.cli.print.header</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.cli.print.current.db</name>
        <value>true</value>
      </property>
      <!-- In XML, "&" inside the JDBC URL must be written as "&amp;". -->
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://hadoop004:3306/db_hive_metastore?createDatabaseIfNotExist=true&amp;useUnicode=true&amp;characterEncoding=utf-8&amp;useSSL=false</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>bigdata</value>
      </property>
      <property>
        <name>hive.metastore.schema.verification</name>
        <value>false</value>
      </property>
      <property>
        <name>hive.server2.session.check.interval</name>
        <value>60000</value>
      </property>
      <property>
        <name>hive.server2.idle.session.timeout</name>
        <value>3600000</value>
      </property>
      <property>
        <name>hive.tez.session.timeout.interval</name>
        <value>600000</value>
      </property>
      <property>
        <name>hive.exec.failure.hooks</name>
        <value>org.apache.hadoop.hive.ql.hooks.ATSHook</value>
      </property>
      <property>
        <name>hive.exec.post.hooks</name>
        <value>org.apache.hadoop.hive.ql.hooks.ATSHook</value>
      </property>
      <property>
        <name>hive.exec.pre.hooks</name>
        <value>org.apache.hadoop.hive.ql.hooks.ATSHook</value>
      </property>
      <property>
        <name>spark.driver.memory</name>
        <value>8G</value>
      </property>
      <property>
        <name>spark.executor.memory</name>
        <value>8G</value>
      </property>
      <property>
        <name>spark.eventLog.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>spark.eventLog.dir</name>
        <value>hdfs://ahpthd/ahpthd/spark-logs</value>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>
      </property>
      <property>
        <name>hive.execution.engine</name>
        <value>mr</value>
      </property>
      <property>
        <name>hive.txn.manager</name>
        <value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value>
      </property>
      <property>
        <name>hive.compactor.initiator.on</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.compactor.worker.threads</name>
        <value>1</value>
      </property>
      <property>
        <name>hive.support.concurrency</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.enforce.bucketing</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.in.test</name>
        <value>false</value>
      </property>
      <property>
        <name>hive.server2.enable.doAs</name>
        <value>false</value>
      </property>
      <property>
        <name>hive.exec.scratchdir</name>
        <value>/ahpthd/tmp/hive</value>
      </property>
      <property>
        <name>hadoop.zk.address</name>
        <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>
      </property>
      <property>
        <name>hive.mapjoin.localtask.max.memory.usage</name>
        <value>0.99</value>
      </property>
      <property>
        <name>hive.auto.convert.join</name>
        <value>false</value>
      </property>
      <property>
        <name>hive.jdbc_passwd.auth.hadoop</name>
        <value>root</value>
      </property>
      <property>
        <name>hive.server2.authentication</name>
        <value>CUSTOM</value>
      </property>
      <property>
        <name>hive.server2.custom.authentication.class</name>
        <value>org.apache.hadoop.hive.contrib.auth.CustomPasswdAuthenticator</value>
      </property>
      <property>
        <name>hive.fetch.task.conversion</name>
        <value>more</value>
      </property>
      <property>
        <name>hive.local.time.zone</name>
        <value>Asia/Shanghai</value>
      </property>
      <property>
        <name>hive.insert.into.multilevel.dirs</name>
        <value>true</value>
        <description>Allow multi-level directories to be created</description>
      </property>
    </configuration>
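
With a file this long it is easy to define the same property twice. A quick check along the following lines can list repeated names. It is a rough sketch that assumes each `<name>` element sits on a single line, and the function name `duplicate_properties` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print each property name that occurs more than once
# in a Hadoop-style configuration file such as hive-site.xml.
duplicate_properties() {
    conf_file=$1
    # Extract the text between <name> and </name>, then keep only repeated values.
    sed -n 's:.*<name>\(.*\)</name>.*:\1:p' "$conf_file" | sort | uniq -d
}
```

For example, `duplicate_properties hive-site.xml` prints nothing when every property name is unique.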

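2.4 Replace jars

Add: the MySQL connector jar, mysql-connector-java-5.1.28.jar

Replace: the ZooKeeper jar with zookeeper-3.4.13.jar, upgrading from 3.4.6 to 3.4.13

Add: the simple-authentication jar, hive-custom-auth-1.0-SNAPSHOT.jar, which is a rewrite of bdap-1.0.0.jar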
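
The ZooKeeper swap can be scripted so that the old jar is set aside rather than deleted. This is a minimal sketch under assumptions: the helper name `replace_jar` and the `lib_backup` directory next to the lib directory are invented for illustration.

```shell
#!/bin/sh
# Hypothetical helper: swap one jar in a lib directory for a newer version.
# Old jars are moved to a backup directory rather than deleted, so the change can be rolled back.
replace_jar() {
    lib_dir=$1      # e.g. the Hive lib directory
    old_glob=$2     # file name pattern of the jar(s) to retire, e.g. 'zookeeper-3.4.6*.jar'
    new_jar=$3      # path to the replacement jar
    backup_dir="$lib_dir/../lib_backup"
    mkdir -p "$backup_dir"
    # $old_glob is left unquoted on purpose so the shell expands the pattern.
    for old in "$lib_dir"/$old_glob; do
        [ -e "$old" ] || continue
        mv "$old" "$backup_dir/"
    done
    cp "$new_jar" "$lib_dir/"
}
```

Keeping the retired jar around makes it easier to roll back if the new ZooKeeper version turns out to conflict with something else.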

III. Hive on Tez configuration

Add a tez-site.xml file to Hive's conf directory:

    <?xml version="1.0" encoding="utf-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>tez.dag.recovery.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>tez.lib.uris</name>
        <value>hdfs://ahpthd/tez-0.10.0</value>
      </property>
      <property>
        <name>tez.am.resource.memory.mb</name>
        <value>1024</value>
      </property>
      <property>
        <name>hive.tez.java.opts</name>
        <value>-server -Xms1024m -Xmx4096m -Djava.net.preferIPv4Stack=true -XX:NewRatio=8 -XX:+UseNUMA -XX:+UseG1GC</value>
      </property>
      <property>
        <name>hive.auto.convert.join.noconditionaltask</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.tez.auto.reducer.parallelism</name>
        <value>true</value>
      </property>
      <property>
        <name>tez.history.logging.service.class</name>
        <value>org.apache.tez.dag.history.logging.ats.ATSHistoryLoggingService</value>
      </property>
      <property>
        <name>tez.tez-ui.history-url.base</name>
        <value>http://hadoop001:9999/tez/</value>
      </property>
      <property>
        <name>tez.session.am.dag.submit.timeout.secs</name>
        <value>60</value>
      </property>
      <property>
        <name>tez.counters.max</name>
        <value>1200</value>
      </property>
      <property>
        <name>tez.counters.max.groups</name>
        <value>500</value>
      </property>
      <property>
        <name>tez.am.container.reuse.enabled</name>
        <value>false</value>
      </property>
    </configuration>

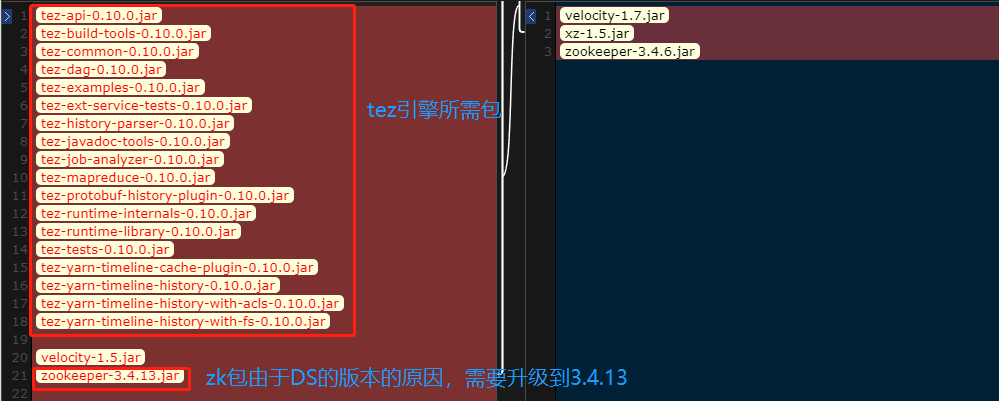

Copy the Tez jars into Hive's lib directory. The Tez 0.10.0 jars are:

    tez-api-0.10.0.jar
    tez-build-tools-0.10.0.jar
    tez-common-0.10.0.jar
    tez-dag-0.10.0.jar
    tez-examples-0.10.0.jar
    tez-ext-service-tests-0.10.0.jar
    tez-history-parser-0.10.0.jar
    tez-javadoc-tools-0.10.0.jar
    tez-job-analyzer-0.10.0.jar
    tez-mapreduce-0.10.0.jar
    tez-protobuf-history-plugin-0.10.0.jar
    tez-runtime-internals-0.10.0.jar
    tez-runtime-library-0.10.0.jar
    tez-tests-0.10.0.jar
    tez-yarn-timeline-cache-plugin-0.10.0.jar
    tez-yarn-timeline-history-0.10.0.jar
    tez-yarn-timeline-history-with-acls-0.10.0.jar
    tez-yarn-timeline-history-with-fs-0.10.0.jar

Change the Hive execution engine in hive-site.xml:

    <property>
      <name>hive.execution.engine</name>
      <value>tez</value>
    </property>

Once everything is configured, copy the entire Hive installation directory to the other nodes. An xsync script can be used for this.
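
If no xsync script is at hand, a small rsync loop does roughly the same job. The sketch below is a stand-in, not the actual xsync script: the function name `sync_dir`, the `DRY_RUN` switch, and the assumption that the directory lives at the same path on every node are all illustrative.

```shell
#!/bin/sh
# Hypothetical stand-in for an xsync script: push one directory to a list of hosts with rsync.
# Set DRY_RUN=1 to print the rsync commands instead of running them.
sync_dir() {
    src_dir=$1
    shift
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "rsync -a $src_dir/ $host:$src_dir/"
        else
            # -a (archive) preserves permissions, timestamps and symlinks.
            rsync -a "$src_dir/" "$host:$src_dir/"
        fi
    done
}
```

A call such as `sync_dir <hive install dir> hadoop002 hadoop003` would push the directory to those two hosts. Which hosts actually need a copy depends on the cluster layout.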

IV. Start HiveServer2 and the metastore

If the big data stack runs as the hadoop user, install and start Hive as the hadoop user too.

    hive --service metastore
    hive --service hiveserver2
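
Both commands above run in the foreground and stop when the terminal closes. A common workaround is to launch them with `nohup` in the background. The wrapper below is a sketch only: the function name `start_service` and the `.out` / `.pid` file naming are assumptions.

```shell
#!/bin/sh
# Hypothetical wrapper: start a Hive service in the background and record its PID.
start_service() {
    name=$1        # service name passed to "hive --service", e.g. metastore
    log_dir=$2     # directory for the console output and PID file
    mkdir -p "$log_dir"
    # nohup keeps the service alive after the terminal closes; stdout and stderr go to one file.
    nohup hive --service "$name" > "$log_dir/$name.out" 2>&1 &
    # $! is the PID of the background job just started.
    echo $! > "$log_dir/$name.pid"
}
```

For instance, `start_service metastore /var/log/udp/2.0.0.0/hive` followed by `start_service hiveserver2 /var/log/udp/2.0.0.0/hive` keeps the same order as the commands above, metastore first.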